Compressor Microbenchmark: LevelDB

Symas Corp., February 2015

Test Results

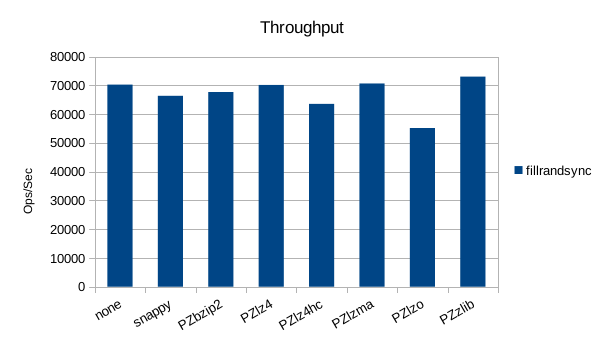

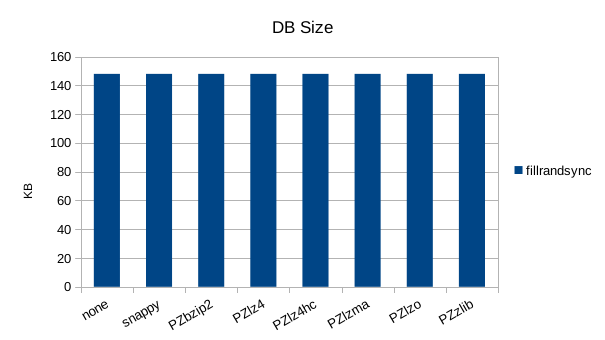

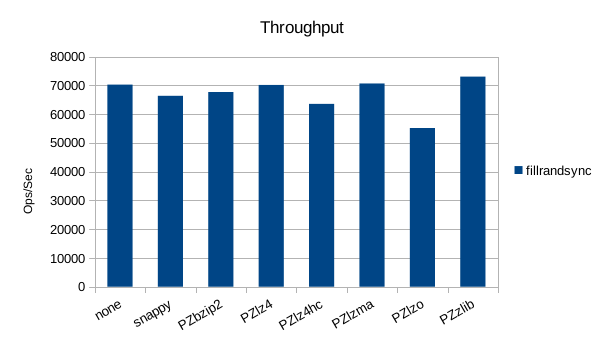

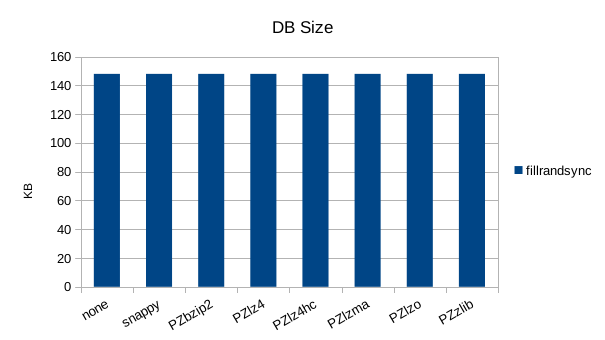

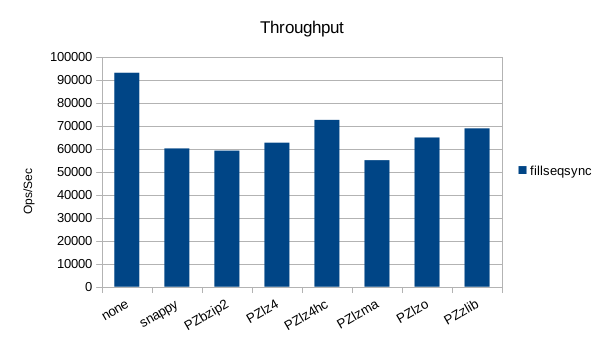

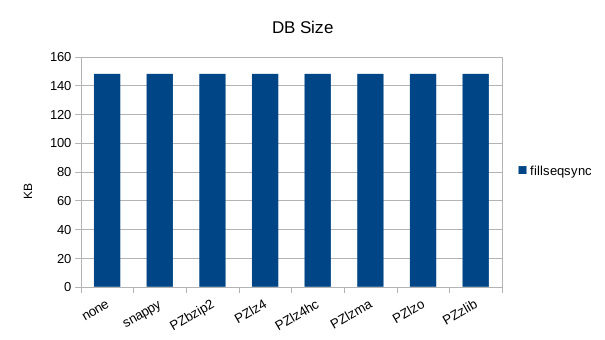

Synchronous Random Write

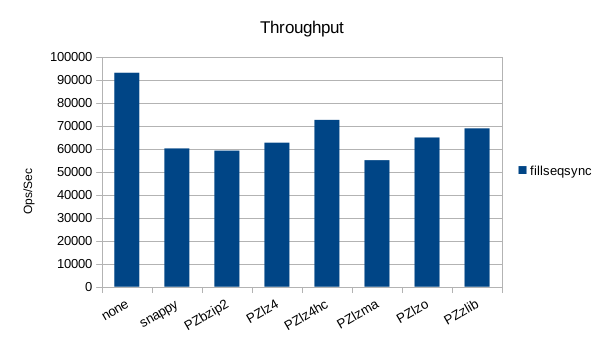

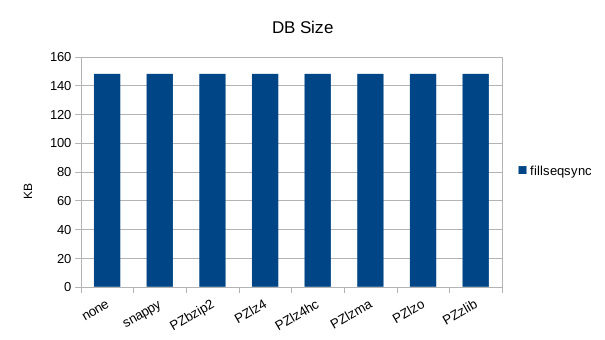

The synchronous tests use only 1000 records, so there's not much to see here.
1000 records with 100 byte values and 16 byte keys should occupy only about
116KB, but the size is consistently over 140KB here, showing a fixed amount of
incompressible overhead in the DB engine.
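The size estimate above is simple arithmetic, sketched here for reference. The record count, key size, and value size come from the text; treating "over 140KB" as a floor of 140,000 bytes (KB taken as 1000 bytes) is an assumption made only for illustration. The raw payload works out to 116,000 bytes, close to the rough figure quoted, which leaves at least 24,000 bytes of engine overhead.

```python
# Back-of-the-envelope payload size for the synchronous tests.
RECORDS = 1000
KEY_BYTES = 16
VALUE_BYTES = 100


def raw_payload_bytes(records, key_bytes, value_bytes):
    """Bytes needed for keys and values alone, with no engine overhead."""
    return records * (key_bytes + value_bytes)


payload = raw_payload_bytes(RECORDS, KEY_BYTES, VALUE_BYTES)

# Assumption: "over 140KB" is read as a lower bound of 140,000 bytes.
observed_floor = 140 * 1000
overhead = observed_floor - payload

print(payload)   # 116000
print(overhead)  # 24000
```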

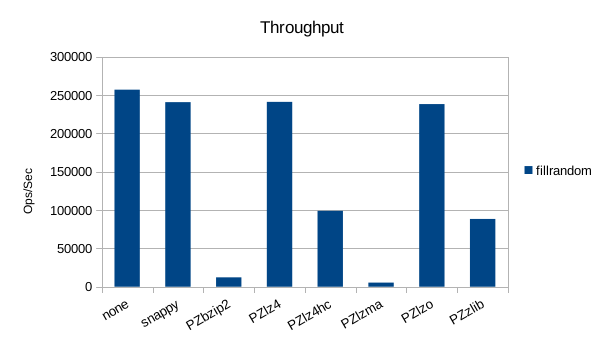

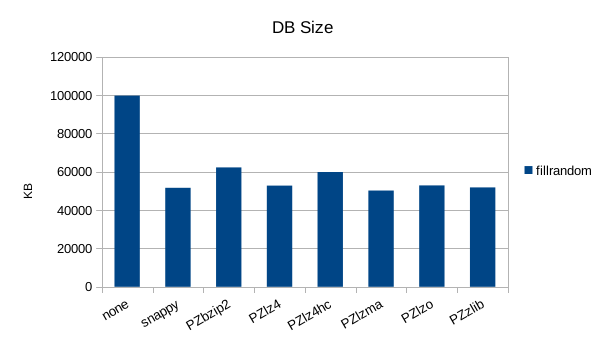

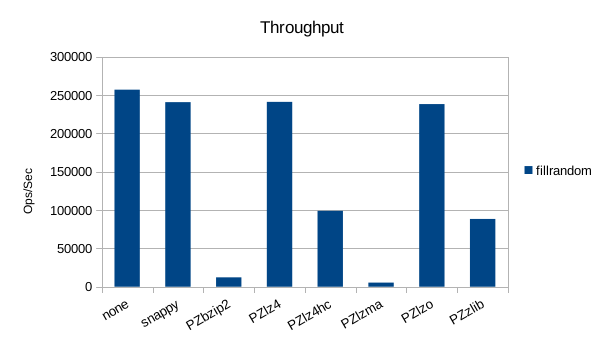

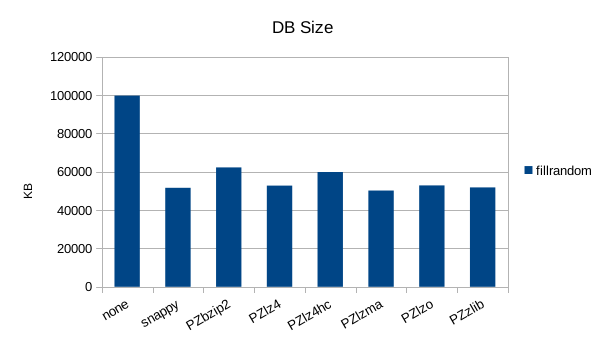

Random Write

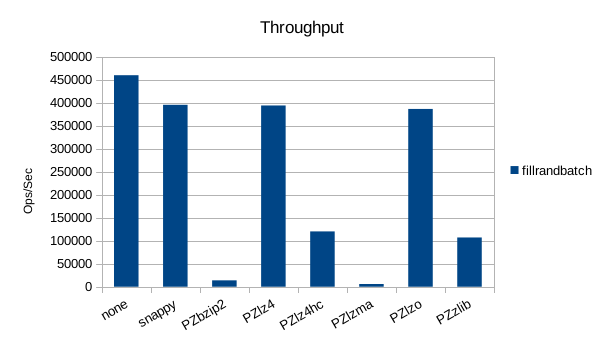

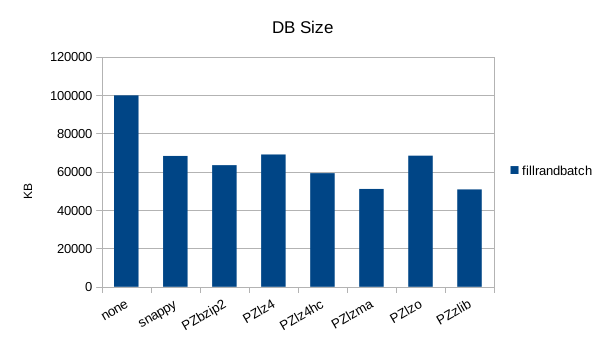

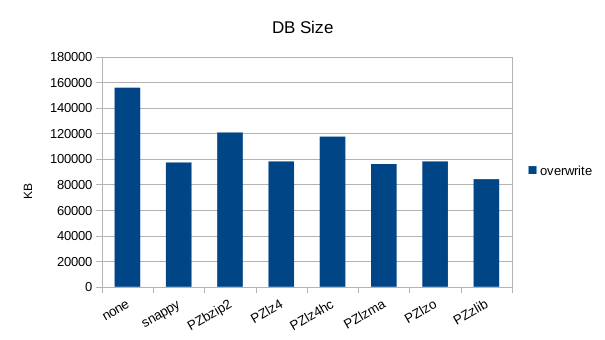

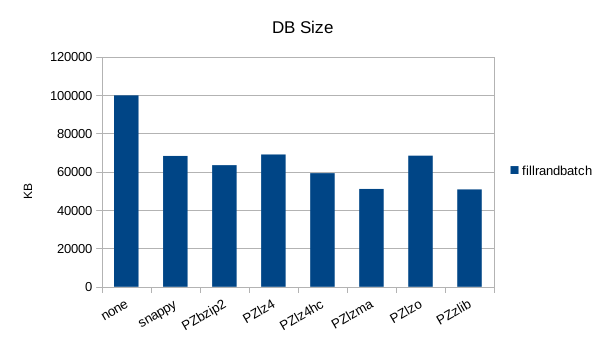

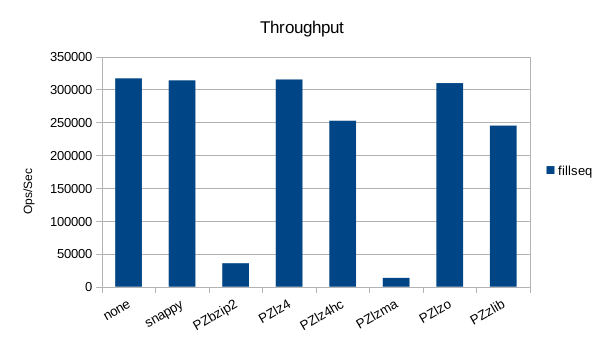

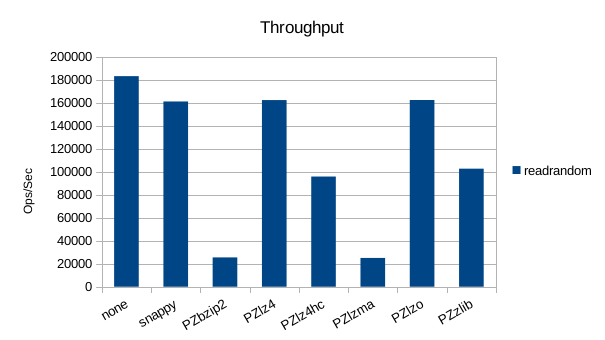

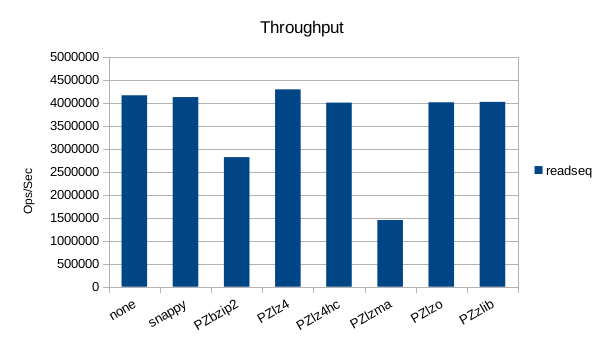

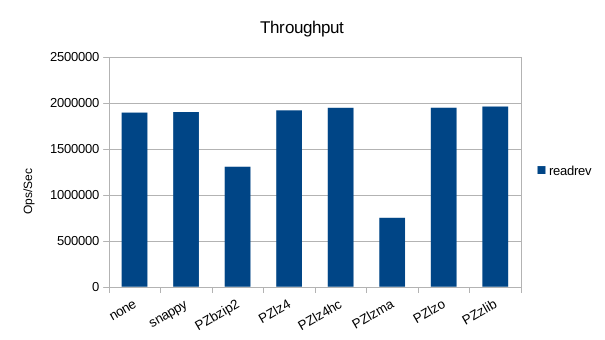

The asynchronous tests use 1000000 records and show drastic differences in
throughput, and notable differences in compression. The general trend for
all the remaining tests will be that snappy, lz4, and lzo are better for
speed, while zlib is better for size.
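The speed-versus-size trade-off can be explored with a small harness like the one below. This is a sketch under stated assumptions, not the benchmark used for the charts: it uses only compressors from the Python standard library (zlib at two levels, and lzma) as stand-ins, because snappy, lz4, and lzo need third-party bindings. The synthetic data (values that are half random and half zero bytes, grouped into roughly block-sized chunks) and the helper names `make_block` and `measure` are hypothetical.

```python
import lzma
import random
import time
import zlib


def make_block(rng, records=35, value_bytes=100):
    """Build one synthetic block: each value is half random, half zero bytes."""
    half = value_bytes // 2
    return b"".join(
        bytes(rng.getrandbits(8) for _ in range(half)) + b"\x00" * (value_bytes - half)
        for _ in range(records)
    )


def measure(name, compress, blocks):
    """Return (name, seconds, compressed_bytes) for compressing every block."""
    start = time.perf_counter()
    total = sum(len(compress(block)) for block in blocks)
    elapsed = time.perf_counter() - start
    return name, elapsed, total


rng = random.Random(2015)  # fixed seed so the data is reproducible
blocks = [make_block(rng) for _ in range(200)]
raw_bytes = sum(len(block) for block in blocks)

# Stand-ins only: these are not the snappy/lz4/lzo builds from the charts.
candidates = {
    "zlib-1": lambda data: zlib.compress(data, 1),
    "zlib-9": lambda data: zlib.compress(data, 9),
    "lzma": lzma.compress,
}

results = [measure(name, fn, blocks) for name, fn in candidates.items()]
for name, seconds, size in results:
    print(f"{name:8s} {seconds * 1000:8.1f} ms  ratio {raw_bytes / size:.2f}")
```

Timings depend on the machine and on the data, so the printed numbers are only indicative; the point is that each compressor yields a time and a size that can be compared side by side.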

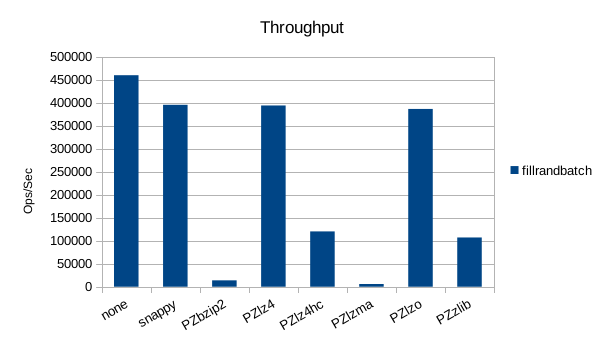

Random Batched Write

Synchronous Sequential Write

The synchronous tests only use 1000 records so there's not much to see here.

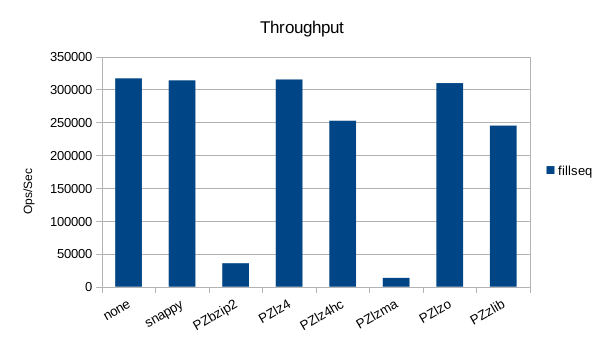

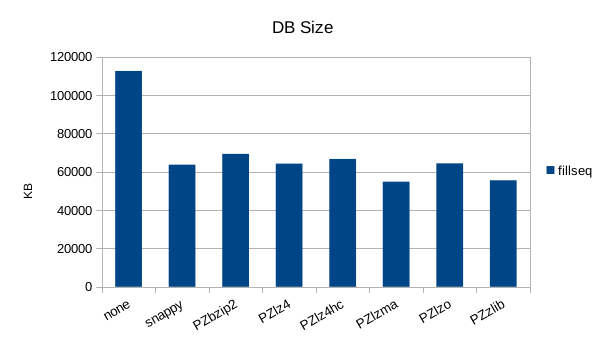

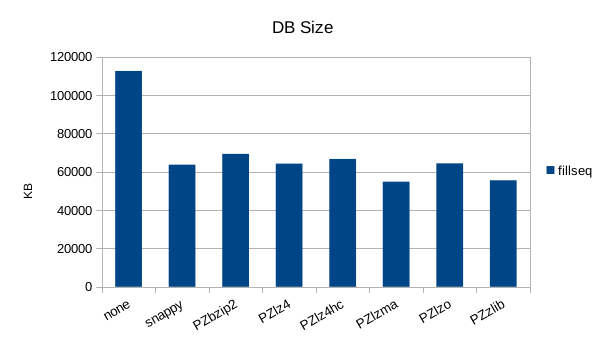

Sequential Write

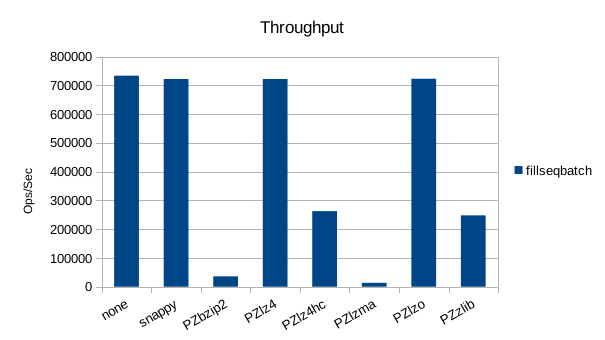

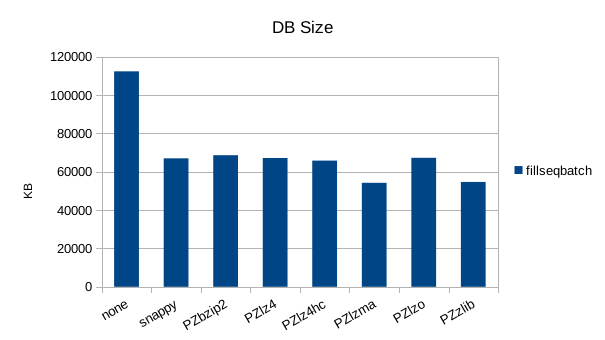

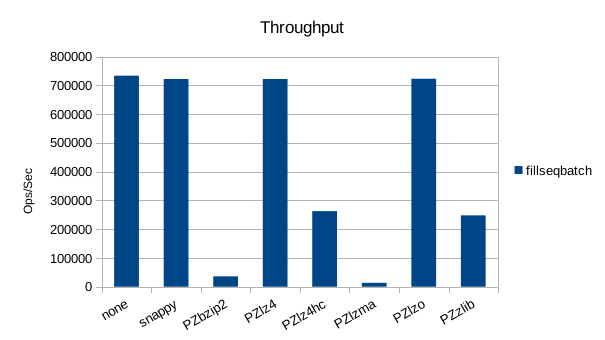

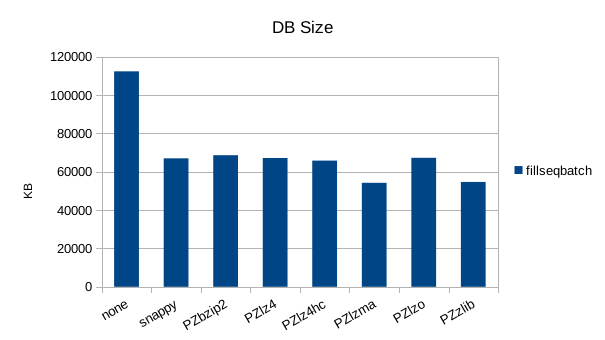

Sequential Batched Write

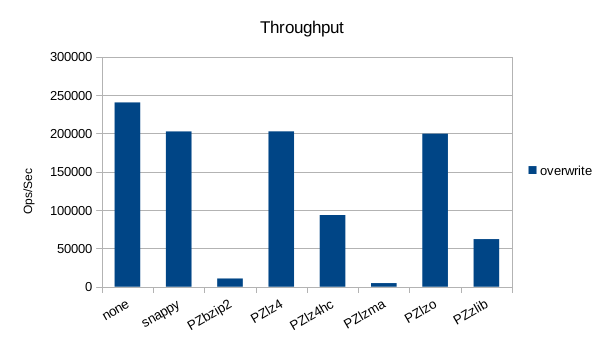

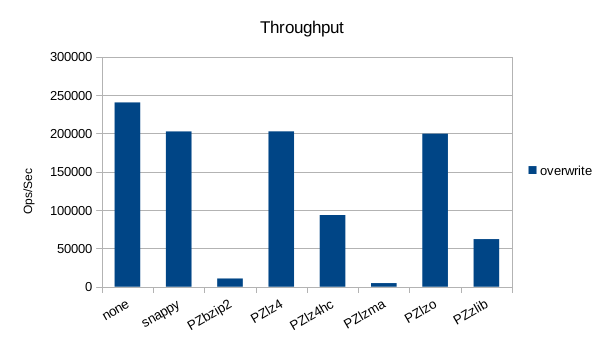

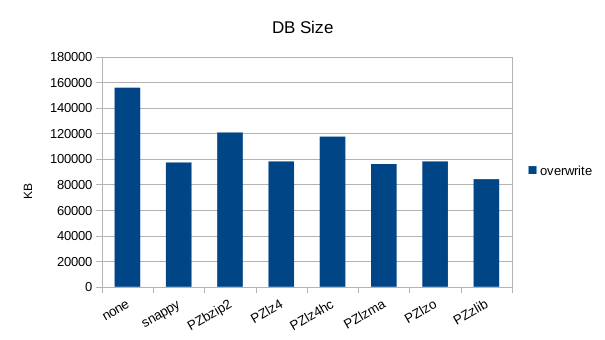

Random Overwrite

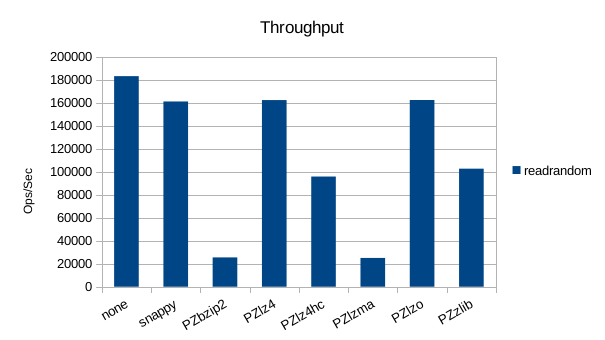

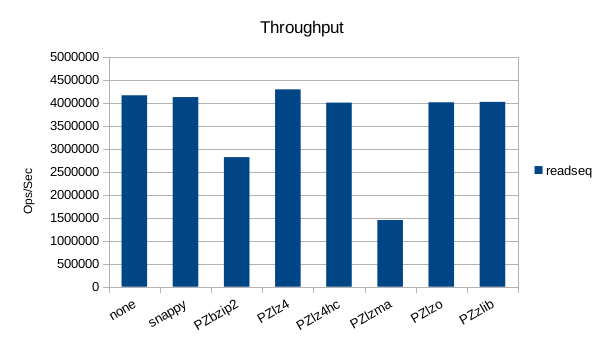

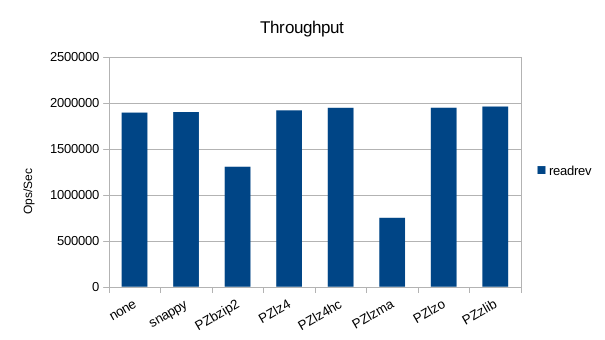

Read-Only Throughput

Summary

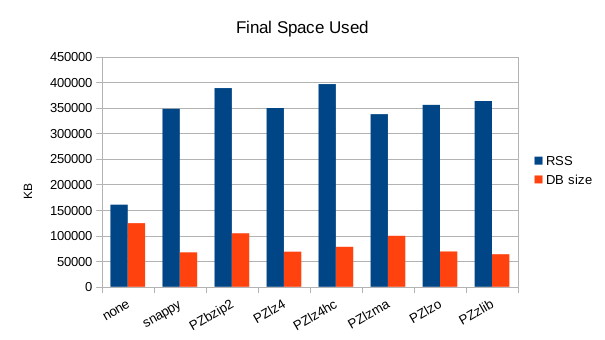

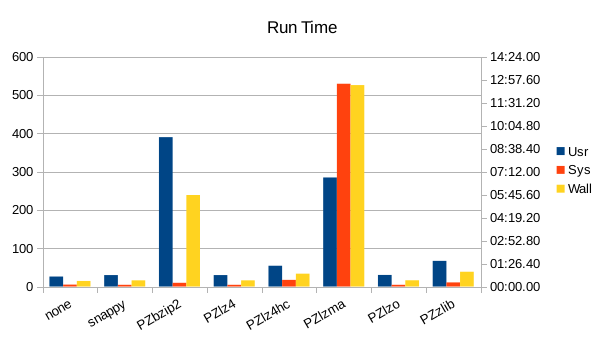

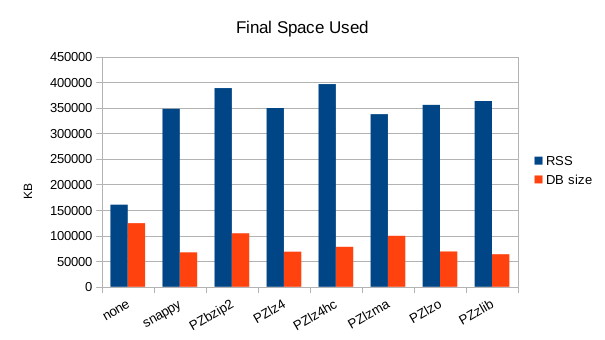

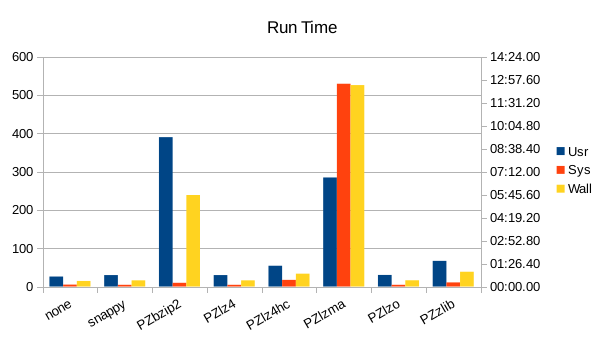

These charts show the final stats at the end of the run, after all compactions
completed. The RSS shows the maximum size of the process for a given run. The
times are the total User and System CPU time, and the total Wall Clock time to
run all of the test operations for a given compressor.
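For readers who want to collect the same four quantities (maximum RSS, User CPU, System CPU, and Wall Clock time) for a process of their own, here is a minimal sketch using Python's `resource` module on a Unix-like system. It is not necessarily how the numbers in these charts were gathered; the helper `run_and_measure` and the stand-in workload are hypothetical.

```python
import resource
import subprocess
import sys
import time


def run_and_measure(argv):
    """Run argv to completion; return wall, user, and system seconds plus peak RSS."""
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.perf_counter()
    subprocess.run(argv, check=True)
    wall = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    return {
        "wall_s": wall,
        "user_s": after.ru_utime - before.ru_utime,
        "sys_s": after.ru_stime - before.ru_stime,
        # ru_maxrss is the largest peak among all waited-for children so far,
        # reported in kilobytes on Linux and in bytes on macOS.
        "max_rss": after.ru_maxrss,
    }


# Hypothetical stand-in workload; substitute the benchmark command of interest.
stats = run_and_measure([sys.executable, "-c", "sum(range(10**6))"])
print(stats)
```

A high `sys_s` relative to `user_s` means the process spent much of its time in the kernel, which is the kind of signal the lzma observation in this summary is based on.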

The huge amount of system CPU time in the lzma run indicates a lot of malloc
overhead in that library.

Files

The files used to perform these tests are all available for download.

The command script: cmd-level.sh.

Raw output: out.level.txt.

OpenOffice spreadsheet LevelDB.ods.