Compressor Microbenchmark: Basho LevelDB

Symas Corp., February 2015

Test Results

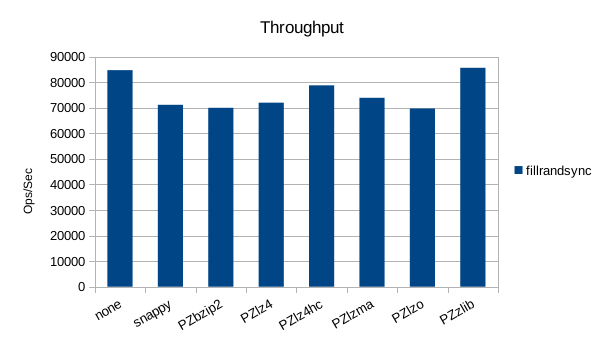

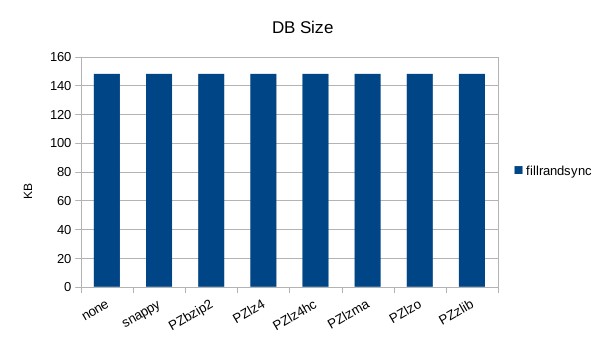

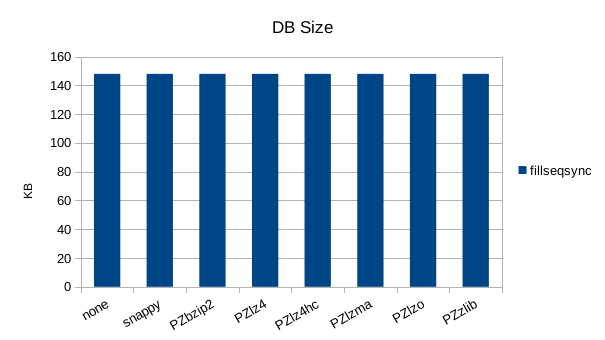

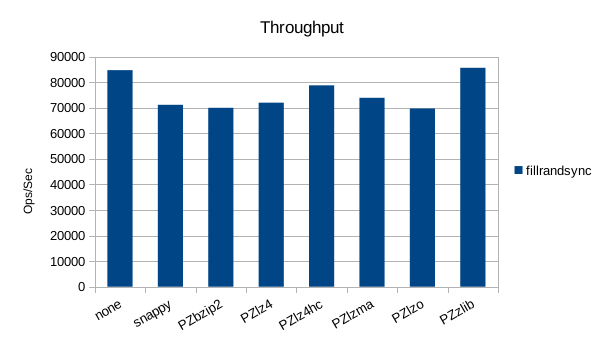

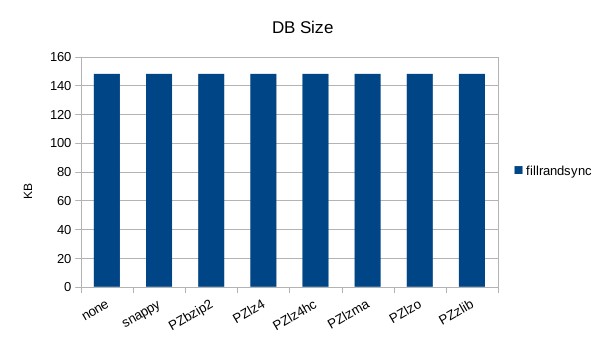

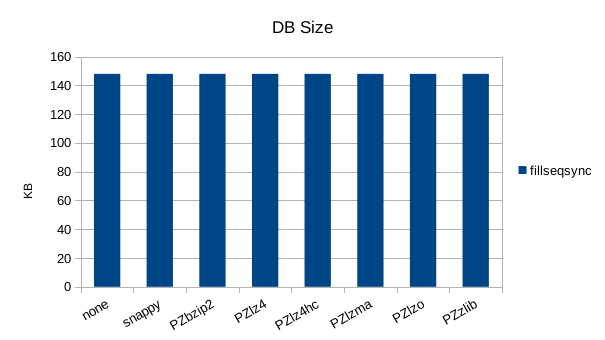

Synchronous Random Write

The synchronous tests use only 1000 records, so there's not much to see here. 1000 records with 100-byte values and 16-byte keys should occupy only about 116KB (1000 × 116 bytes), but the size is consistently over 140KB here, showing a fixed amount of incompressible overhead in the DB engine.
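The size estimate above is simple arithmetic, and a minimal sketch of it follows. The record count, key size, and value size come from the text; the function names are illustrative, and treating "140KB" as 140,000 bytes is an assumption made only to put a rough number on the overhead.

```python
# Raw payload for the synchronous test: 1000 records, 16-byte keys, 100-byte values.
RECORDS = 1000
KEY_BYTES = 16
VALUE_BYTES = 100


def raw_payload_bytes(records, key_bytes, value_bytes):
    """Bytes of key and value data alone, with no engine overhead."""
    return records * (key_bytes + value_bytes)


def overhead_bytes(observed_bytes, records, key_bytes, value_bytes):
    """Bytes on disk beyond the raw key and value payload."""
    return observed_bytes - raw_payload_bytes(records, key_bytes, value_bytes)


payload = raw_payload_bytes(RECORDS, KEY_BYTES, VALUE_BYTES)

# Assumption: the "over 140KB" floor from the text, read as 140 * 1000 bytes.
observed_floor = 140 * 1000
overhead = overhead_bytes(observed_floor, RECORDS, KEY_BYTES, VALUE_BYTES)

print(payload)   # 116000
print(overhead)  # 24000
```

Under these assumptions the engine adds at least roughly 24,000 bytes on top of the raw data, regardless of which compressor is selected.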

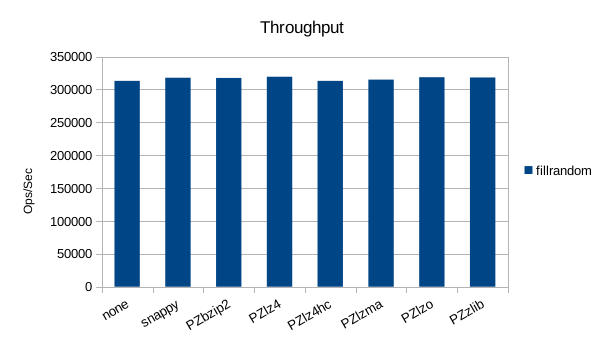

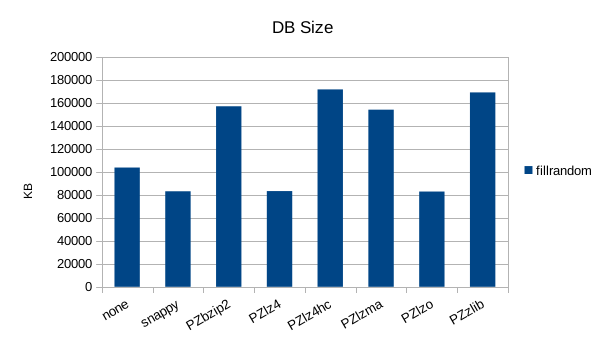

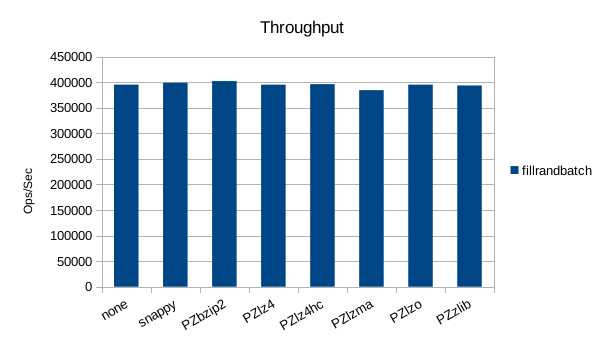

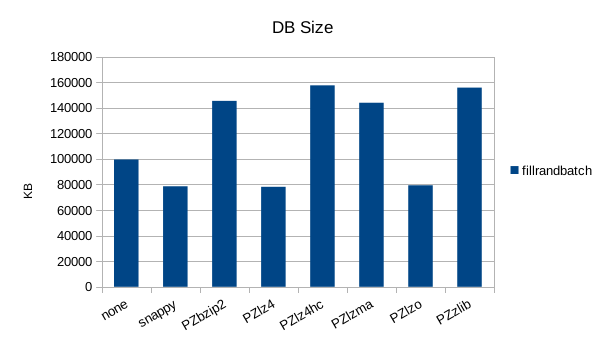

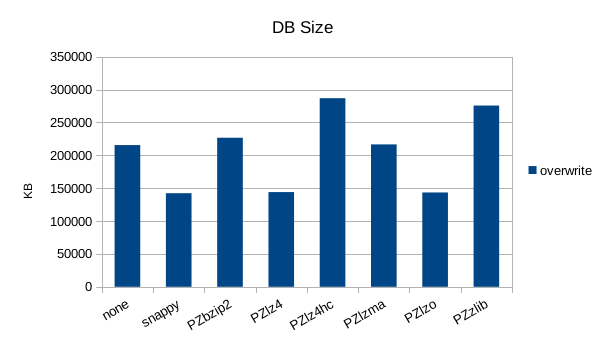

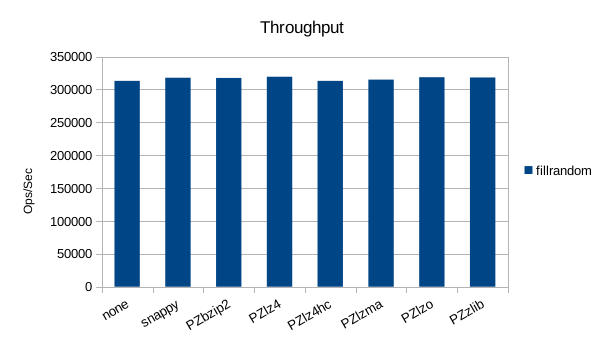

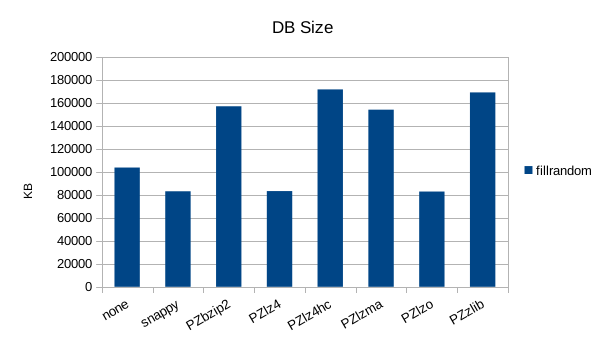

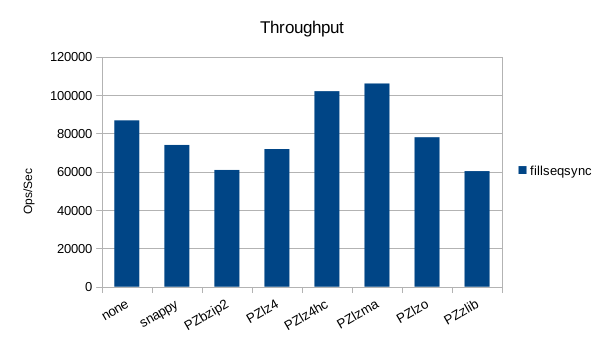

Random Write

The asynchronous tests use 1,000,000 records and, while showing no significant difference in throughput, show widely varying results for the DB sizes. Basho goes to great lengths to maintain constant write rates, which explains the relative uniformity of the throughput for each compressor. What's unexpected is that some of the compressors yield DBs much larger than the uncompressed case.

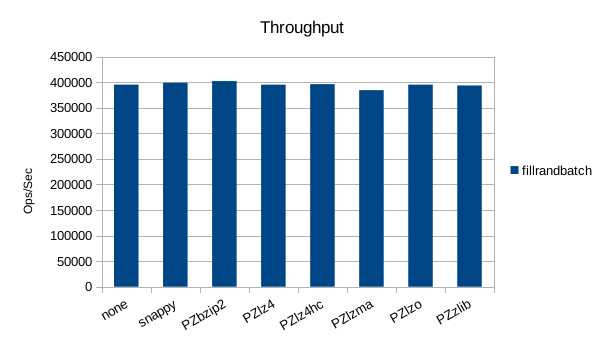

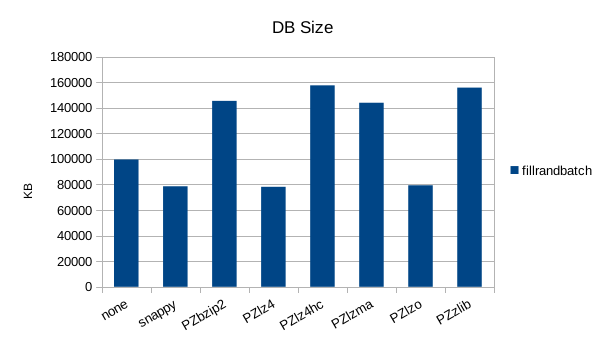

Random Batched Write

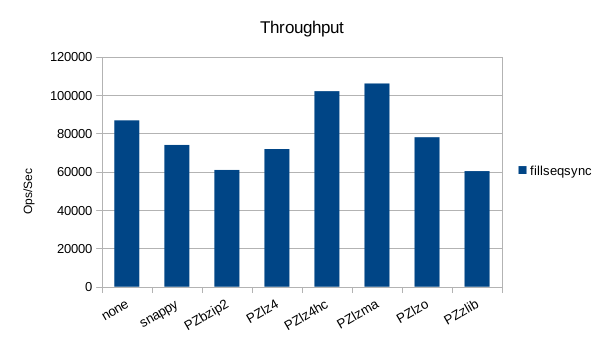

Synchronous Sequential Write

The synchronous tests only use 1000 records so there's not much to see here.

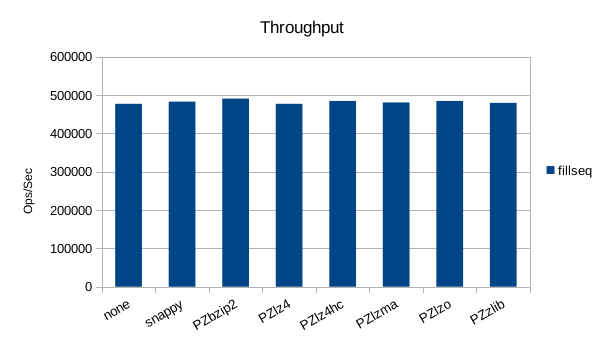

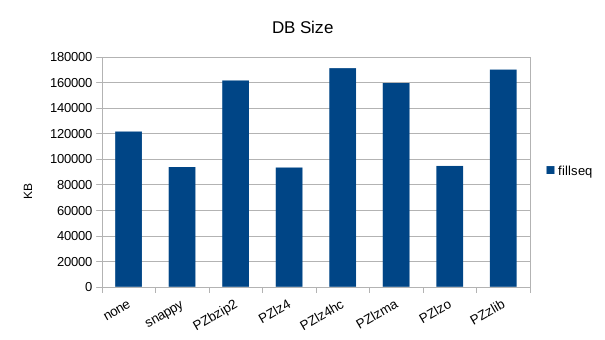

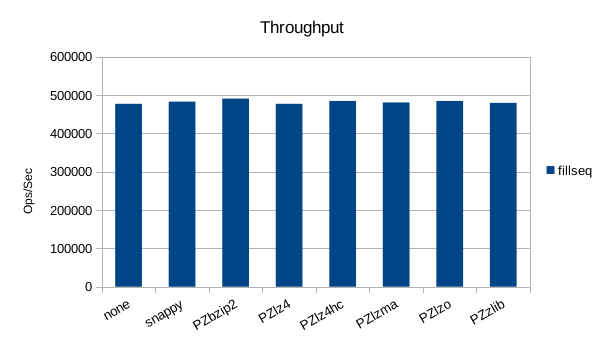

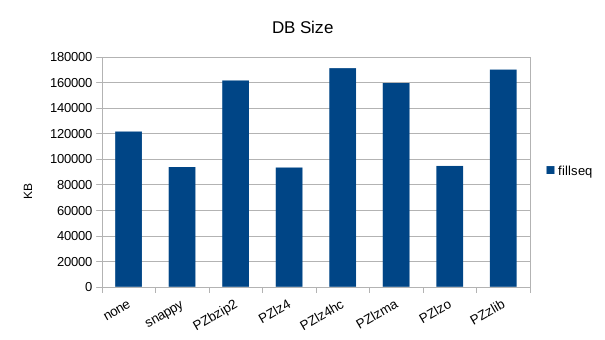

Sequential Write

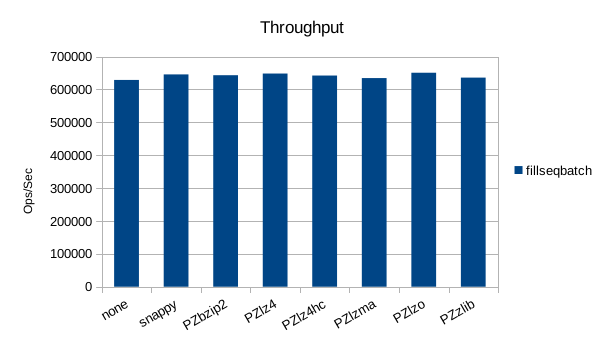

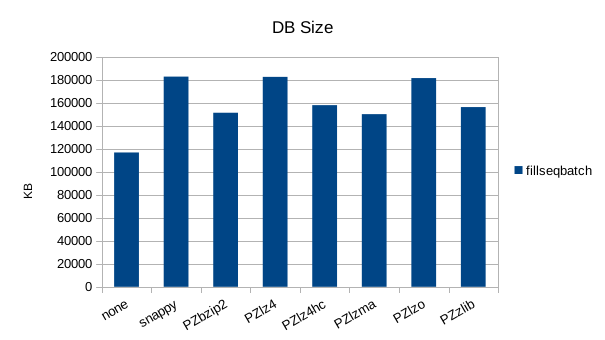

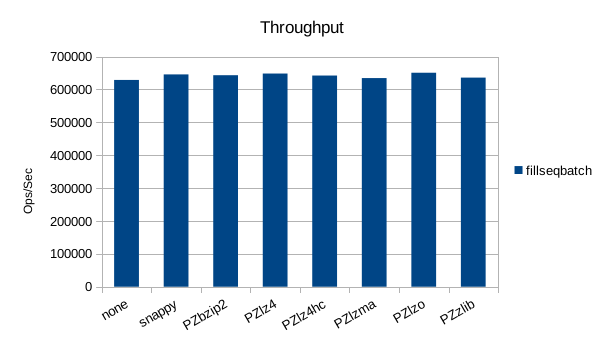

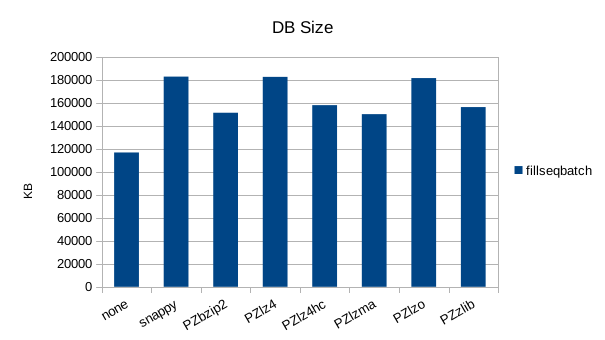

Sequential Batched Write

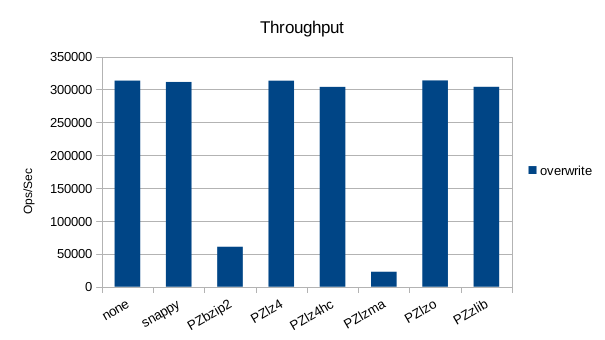

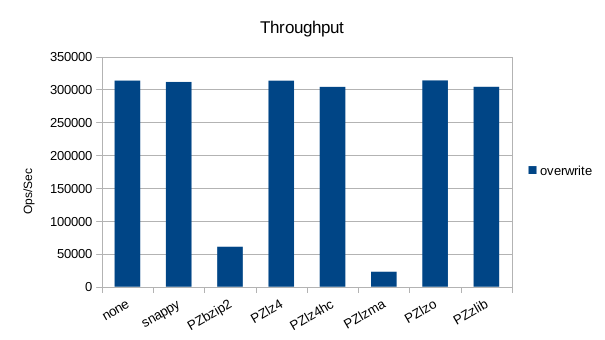

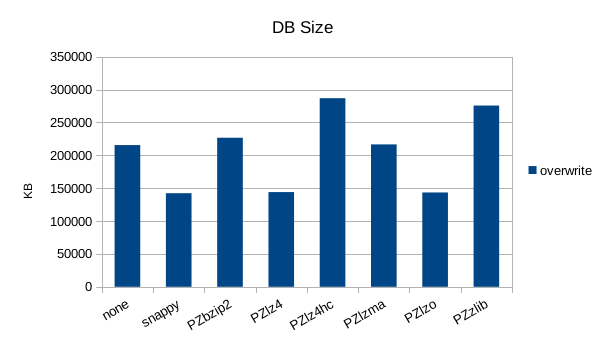

Random Overwrite

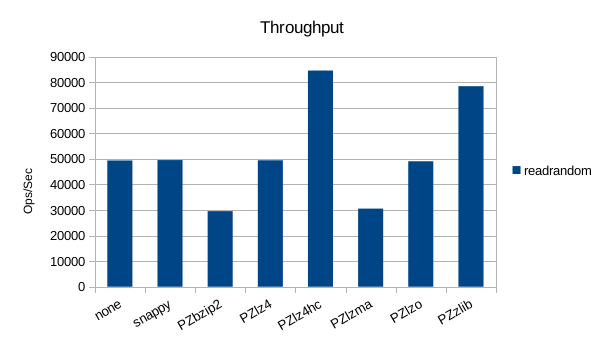

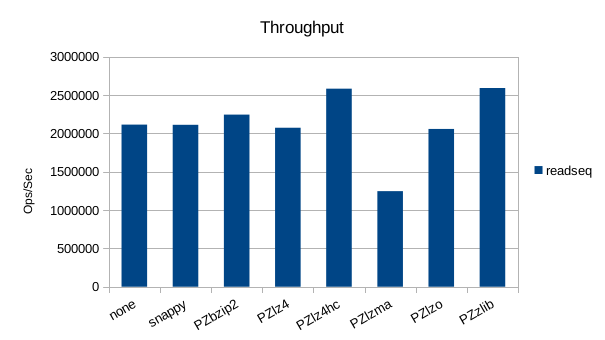

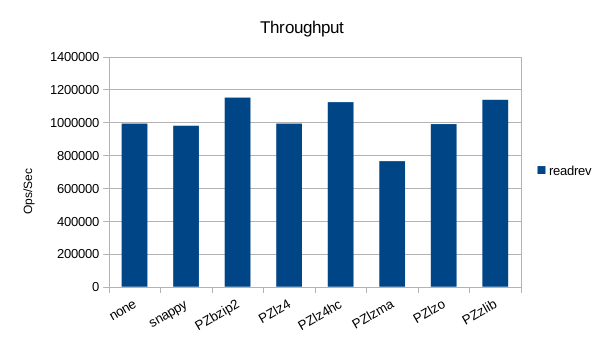

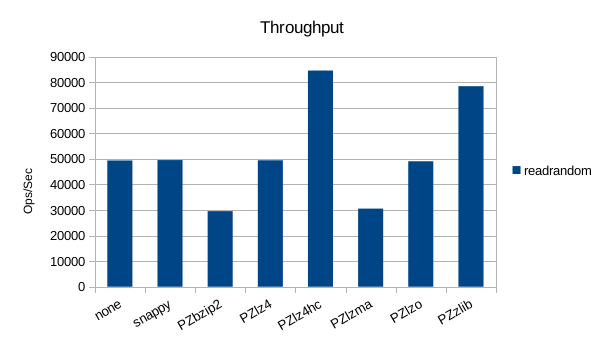

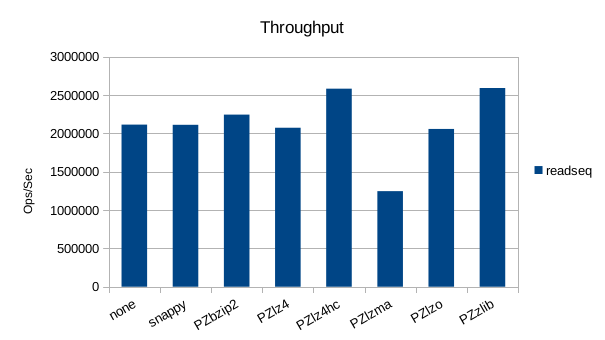

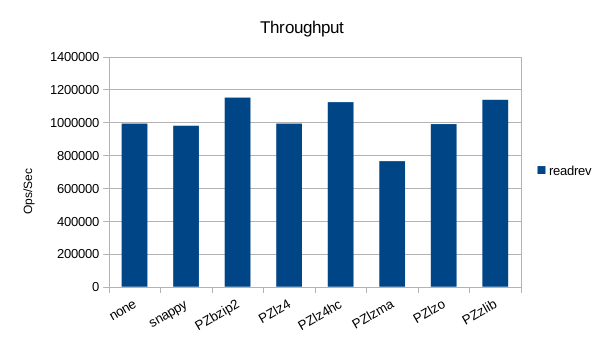

Read-Only Throughput

In the read-only tests we also find that using some of the compressors yields faster throughput than the uncompressed case.

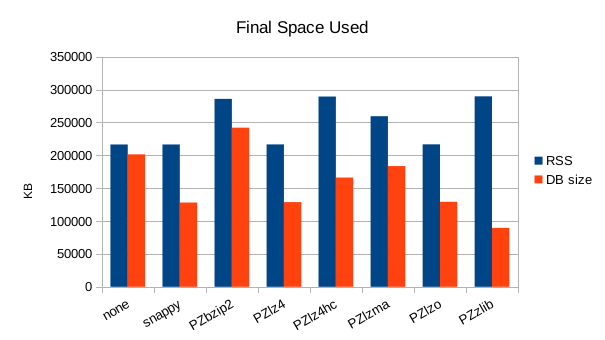

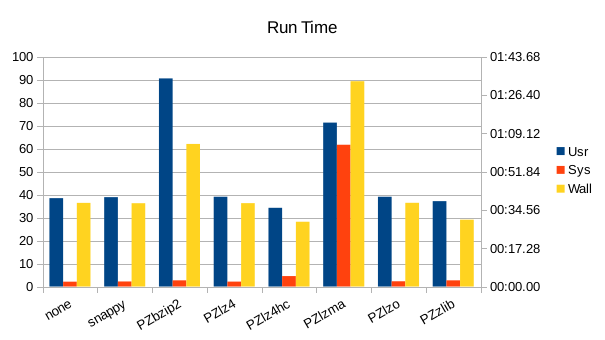

Summary

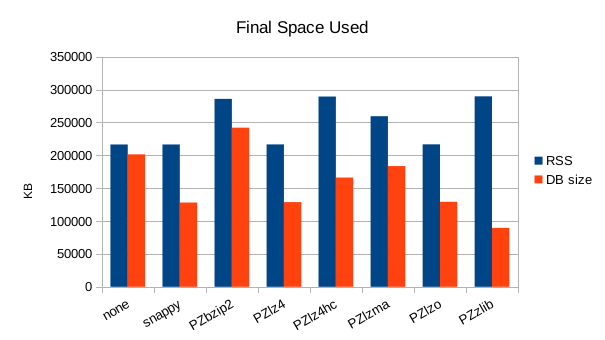

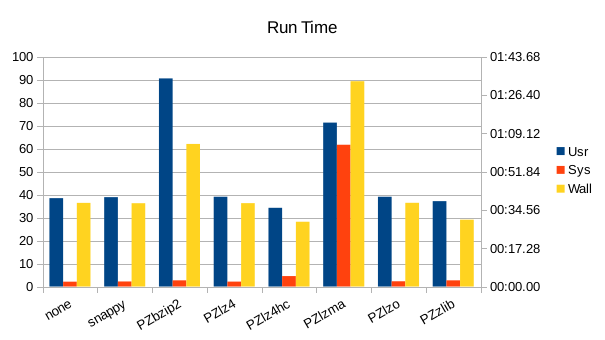

These charts show the final stats at the end of the run, after all compactions completed. The RSS shows the maximum size of the process for a given run. The times are the total User and System CPU time, and the total Wall Clock time to run all of the test operations for a given compressor.
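For readers who want to collect the same four figures for their own runs, here is a minimal sketch using Python's standard `resource` module on a Unix-like system. It is not the method used to produce the charts above (that is in the command script listed under Files); the `measure` helper and the placeholder command are hypothetical.

```python
import resource
import subprocess
import sys
import time


def measure(cmd):
    """Run cmd to completion; return wall, user, and system seconds plus peak RSS."""
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.monotonic()
    subprocess.run(cmd, check=True)
    wall = time.monotonic() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    return {
        "wall_s": wall,
        # CPU times accumulate over all waited-for children, so take the difference.
        "user_s": after.ru_utime - before.ru_utime,
        "sys_s": after.ru_stime - before.ru_stime,
        # Peak RSS of the largest child waited for so far, not a difference.
        # Units are platform dependent: kilobytes on Linux, bytes on macOS.
        "max_rss": after.ru_maxrss,
    }


# Placeholder workload; substitute the benchmark command of interest.
stats = measure([sys.executable, "-c", "sum(range(100000))"])
print(stats)
```

Because `ru_maxrss` reports a running maximum across children, each compressor is best measured in a fresh parent process so that one run's peak does not mask another's.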

The huge amount of system CPU time in the lzma run indicates a lot of malloc overhead in that library. At a cost of 50% more RAM usage, the test with zlib yields both the smallest DB and the quickest runtime.

Files

The files used to perform these tests are all available for download.

The command script: cmd-basho.sh.

Raw output: out.basho.txt.

OpenOffice spreadsheet: Basho.ods.